Recently I ran into an interesting problem: I had to store the height and width of uploaded images in the database.

I had used CarrierWave to upload the files to S3. With the help of ImageMagick, there is a simple shell command, `identify`, that finds the dimensions of an image:

```ruby
width, height = `identify -format "%wx%h" #{img}`.split('x').map(&:to_i)
```

The backticks run `identify` as a shell command from Ruby and return its output (e.g. `640x480`), which is then split on the `x` into width and height.
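One weakness of the `split` and `to_i` chain is that it quietly turns unexpected output into wrong values: if `identify` fails, it prints nothing to stdout, so both dimensions come out as `nil`. The sketch below is a hypothetical `parse_dimensions` helper (not part of ImageMagick or Rails) that accepts output with or without a space after the `x` and raises on anything else:

```ruby
# Hypothetical helper: turns identify's "<width>x<height>" output
# (e.g. "640x480" or "640x 480") into two integers, and raises
# instead of silently returning nil or 0 for unexpected output.
def parse_dimensions(output)
  match = output.strip.match(/\A(\d+)x\s*(\d+)\z/)
  raise ArgumentError, "unexpected identify output: #{output.inspect}" unless match

  [match[1].to_i, match[2].to_i]
end

width, height = parse_dimensions("640x480")
puts "#{width} by #{height}" # prints 640 by 480
```

Multi-frame images (such as animated GIFs) print one size per frame back to back, which this helper also rejects rather than misreading.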

The catch is that the image (`img`) has to be on your local disk, and in my case the files were on S3. So I came up with the method below, which downloads each file locally so it can be processed and then deleted, since the downloaded files could take up a lot of disk space if left behind.
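Ruby's standard library already covers this download, process, delete lifecycle: `Dir.mktmpdir` with a block creates a temporary directory and removes it, contents included, when the block returns or raises. A minimal sketch wrapping that in a hypothetical `with_local_copy` helper; in the S3 case `data` would be the downloaded bytes (for example from `URI.open(url).read`), which is an assumption about how you fetch them:

```ruby
require 'tmpdir'

# Hypothetical helper: writes `data` into a fresh temporary directory,
# yields the file's path, then deletes the directory (and the file)
# when the block finishes, even if the block raises.
def with_local_copy(data, file_name)
  Dir.mktmpdir do |dir|
    path = File.join(dir, file_name)
    File.binwrite(path, data)
    yield path
  end
end

# Example: the block sees a real file; afterwards it is gone.
seen = nil
with_local_copy("fake image bytes", "photo.jpg") do |path|
  seen = path
  puts File.size(path) # prints 16
end
puts File.exist?(seen) # prints false
```

Because cleanup lives in `ensure`-like code inside `Dir.mktmpdir`, a failure while processing one image cannot leave its file lying around.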

```ruby
require 'open-uri'
require 'fileutils'

# method to download files from remote; returns the local file path
def download_file_from_url(object)
  # conditionally create a folder by id if it doesn't already exist
  temp_path = Rails.root.join('tmp', object.id.to_s)
  FileUtils.mkdir_p(temp_path)

  file_url = object.image.url # S3 (remote) url of the file
  # name to store the downloaded file as (path only, without any query string)
  file_name = File.basename(URI.parse(file_url).path)
  local_path = temp_path.join(file_name).to_s

  # URI.open (from open-uri) fetches the remote file; write it in binary mode
  File.open(local_path, 'wb') { |file| file << URI.open(file_url).read }

  local_path # return the local file path
end
```
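A note on naming the local file: taking `url.split('/').last` keeps any query string, and when the bucket is private the URL is usually a signed one carrying `?X-Amz-...` parameters. The sketch below is a hypothetical `local_file_name` helper that keeps only the path component; the bucket URL is made up for illustration:

```ruby
require 'uri'

# Hypothetical helper: derives a local file name from a remote URL,
# ignoring any query string (such as the one signed S3 URLs carry).
def local_file_name(url)
  File.basename(URI.parse(url).path)
end

url = "https://example-bucket.s3.amazonaws.com/uploads/image/1/photo.jpg?X-Amz-Expires=600"
puts url.split('/').last  # prints photo.jpg?X-Amz-Expires=600
puts local_file_name(url) # prints photo.jpg
```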

```ruby
require 'fileutils'
require 'shellwords'

Image.find_each do |image|
  img = download_file_from_url(image) # get local path

  # perform identification; Shellwords.escape protects against odd file names
  width, height = `identify -format "%wx%h" #{Shellwords.escape(img)}`.split('x').map(&:to_i)

  FileUtils.rm_rf(Rails.root.join('tmp', image.id.to_s)) # remove the downloaded file

  image.update(width: width, height: height) # store the dimensions
end
```
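Shelling out with backticks also ignores `identify`'s exit status, so a failed run is stored as missing dimensions. A sketch of a hypothetical `identify_dimensions` helper using Ruby's standard `Open3.capture3`, which passes arguments straight to the program (no shell, so no escaping) and returns the exit status; it assumes ImageMagick's `identify` is on the `PATH` (if it is not, `Errno::ENOENT` is raised):

```ruby
require 'open3'

# Raised when identify exits with a non-zero status or prints something unexpected.
class IdentifyError < StandardError; end

# Sketch: runs identify without a shell and checks the exit status.
# The "[0]" suffix asks ImageMagick for the first frame only,
# so animated GIFs report a single size.
def identify_dimensions(path)
  out, err, status = Open3.capture3('identify', '-format', '%wx%h', "#{path}[0]")
  raise IdentifyError, err.strip unless status.success?

  match = out.strip.match(/\A(\d+)x(\d+)\z/)
  raise IdentifyError, "unexpected output: #{out.inspect}" unless match

  [match[1].to_i, match[2].to_i]
end
```

Inside the loop it would replace the backtick line: `width, height = identify_dimensions(img)`, and a bad file then raises instead of writing `nil` dimensions.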

This process can take a while, depending on how many images you need to process, and you may not want to sit idle while it runs. Instead, we can move the whole thing into a background worker, such as Sidekiq.

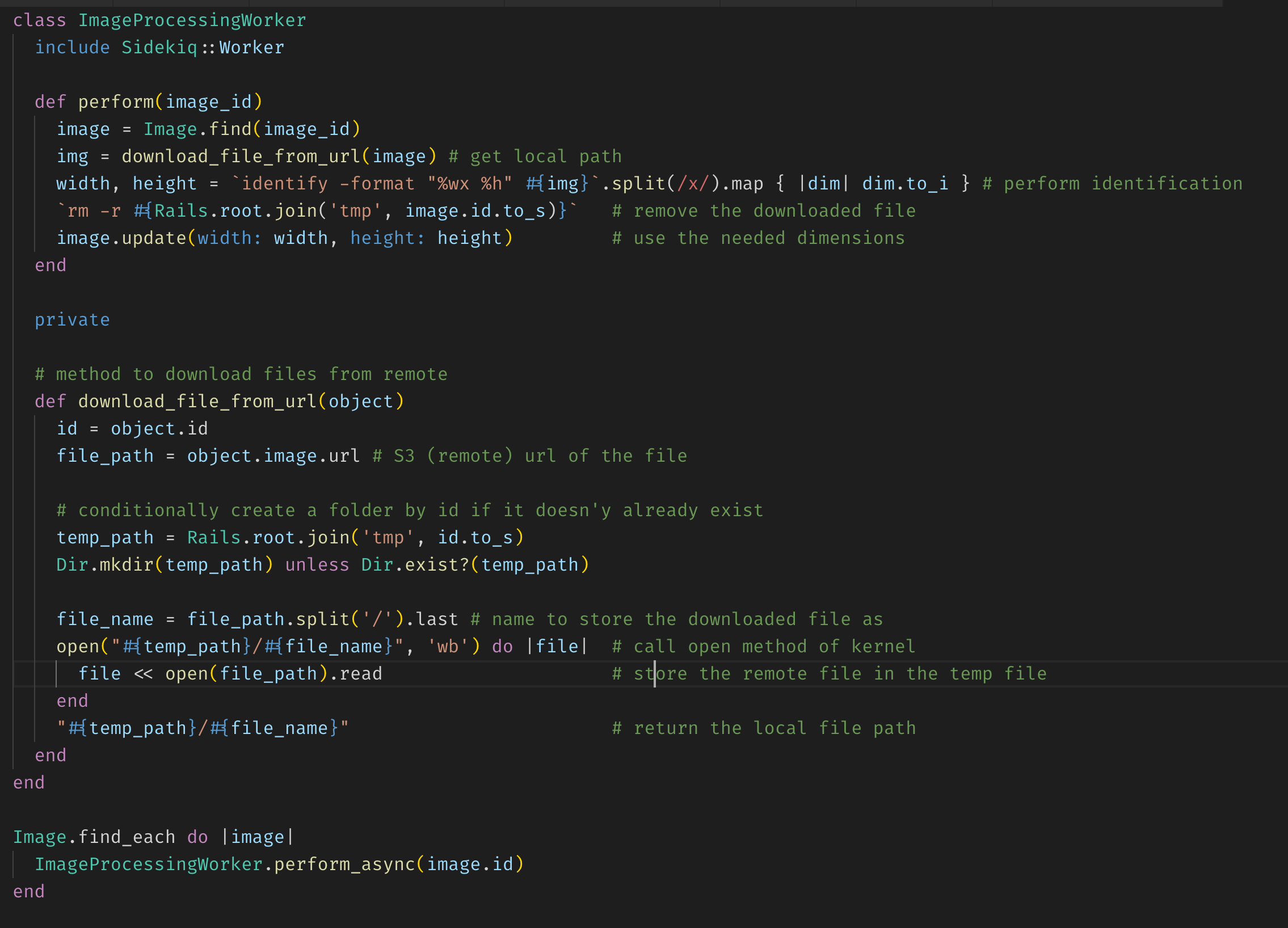

Moved into a Sidekiq worker, the code looks something like this:

```ruby
require 'open-uri'
require 'fileutils'
require 'shellwords'

class ImageProcessingWorker
  include Sidekiq::Worker

  def perform(image_id)
    image = Image.find(image_id)
    img = download_file_from_url(image) # get local path

    # perform identification; Shellwords.escape protects against odd file names
    width, height = `identify -format "%wx%h" #{Shellwords.escape(img)}`.split('x').map(&:to_i)

    FileUtils.rm_rf(Rails.root.join('tmp', image.id.to_s)) # remove the downloaded file

    image.update(width: width, height: height) # store the dimensions
  end

  private

  # method to download files from remote; returns the local file path
  def download_file_from_url(object)
    # conditionally create a folder by id if it doesn't already exist
    temp_path = Rails.root.join('tmp', object.id.to_s)
    FileUtils.mkdir_p(temp_path)

    file_url = object.image.url # S3 (remote) url of the file
    # name to store the downloaded file as (path only, without any query string)
    file_name = File.basename(URI.parse(file_url).path)
    local_path = temp_path.join(file_name).to_s

    # URI.open (from open-uri) fetches the remote file; write it in binary mode
    File.open(local_path, 'wb') { |file| file << URI.open(file_url).read }

    local_path # return the local file path
  end
end
```

Now run the following (for example, from the Rails console) to enqueue the worker for every image, then sit back and relax!

```ruby
Image.find_each do |image|
  ImageProcessingWorker.perform_async(image.id)
end
```